20241007, Day 51

Halfway

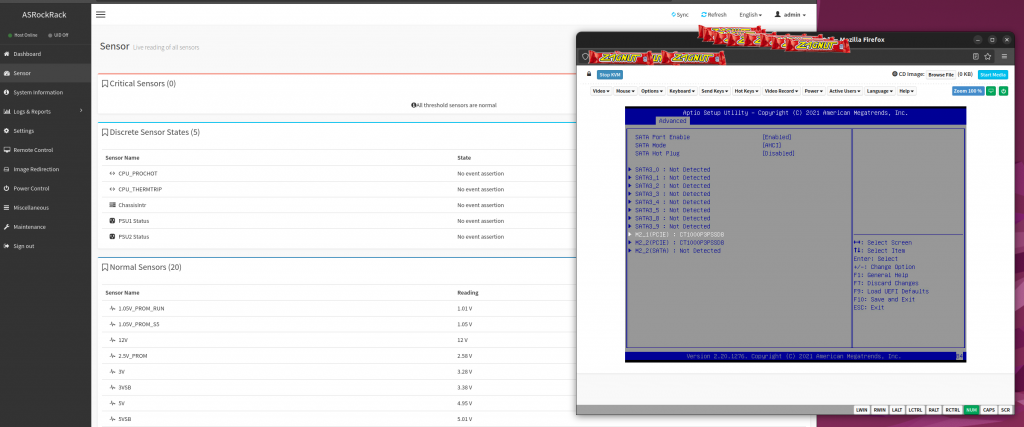

Installed 2TB of M.2 on the server board, plus one of the 40Gb NICs. IPMI is great, and boots faster than iDRAC.

20241008, Day 52

Hard lessons day

The server board is a bit dicey with its onboard video and/or AMD wanting non-free firmware loaded, even though I could cheat my way around at the terminals and get a desktop anyway. This won’t matter once it’s a compute node, but I was hoping to test things easily in a GUI.

Testing 40Gb is not going to work like I thought. Admittedly I was being real optimistic/naive, and there would be big hardware and software problems with what I wanted to do. Best bet is to fire up the enterprise nodes and test the cards between them.

Although I haven’t done any bit-flip tests, the server board’s CPU is at least recognizing multi-bit ECC.

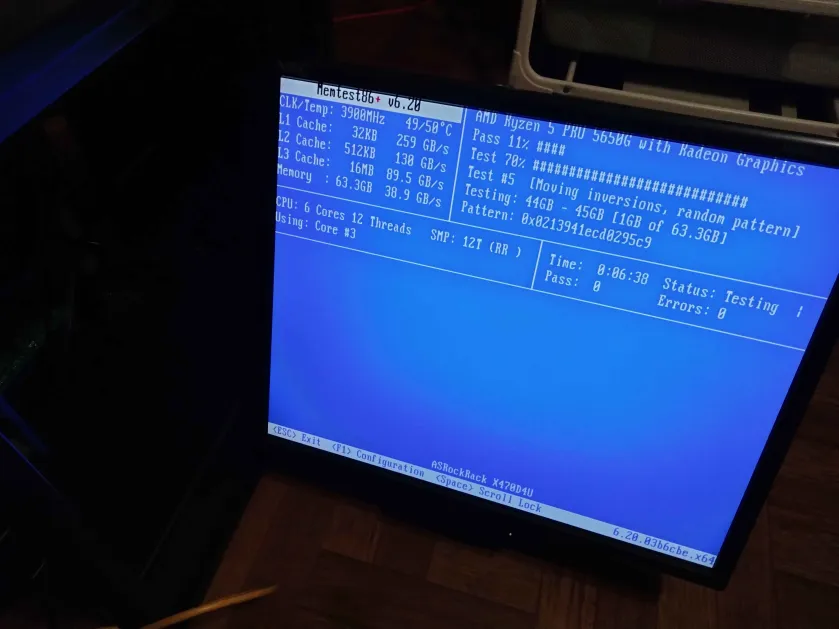

Might as well put the lid on the case and let things cook overnight.

20241009, Day 53

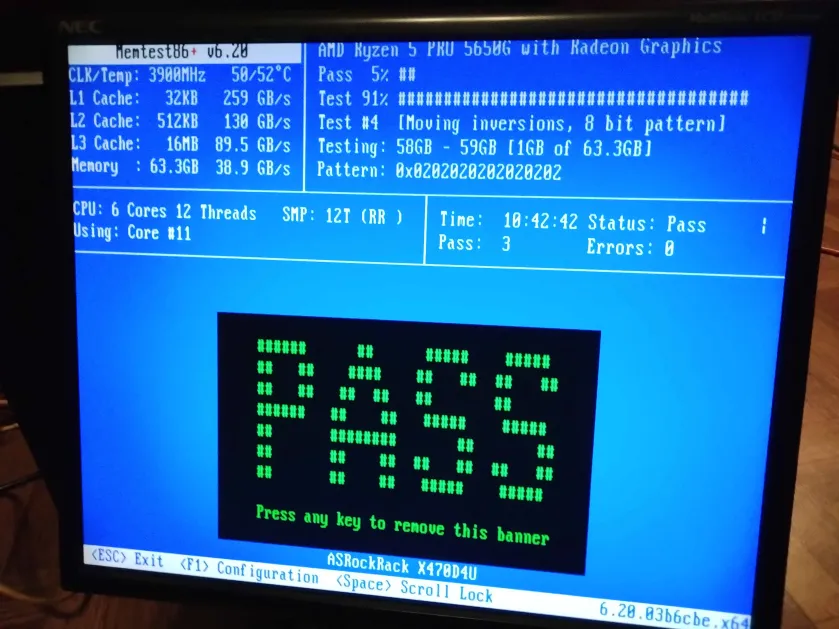

So. Did it pass?

Me: I will now put the lid on the R730.

R730: So I see you’ve pressed the power button

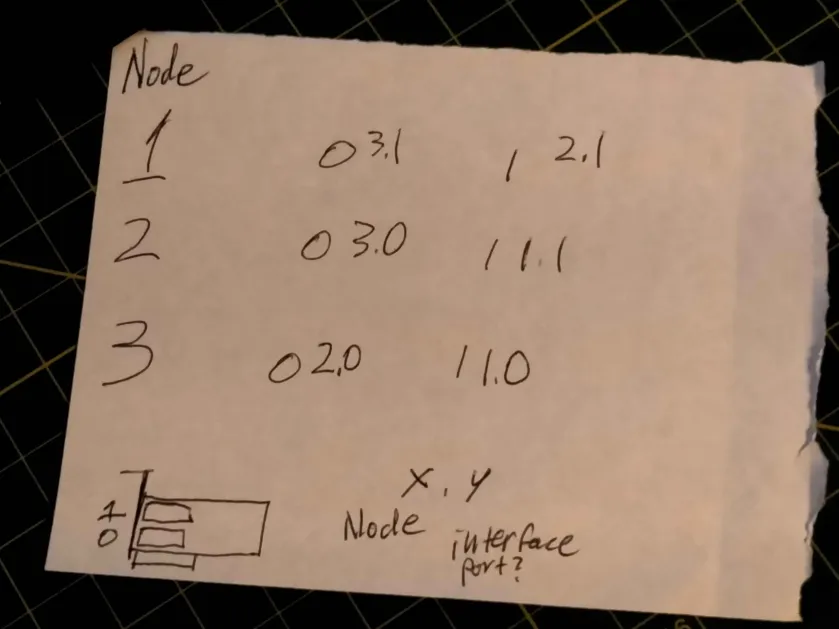

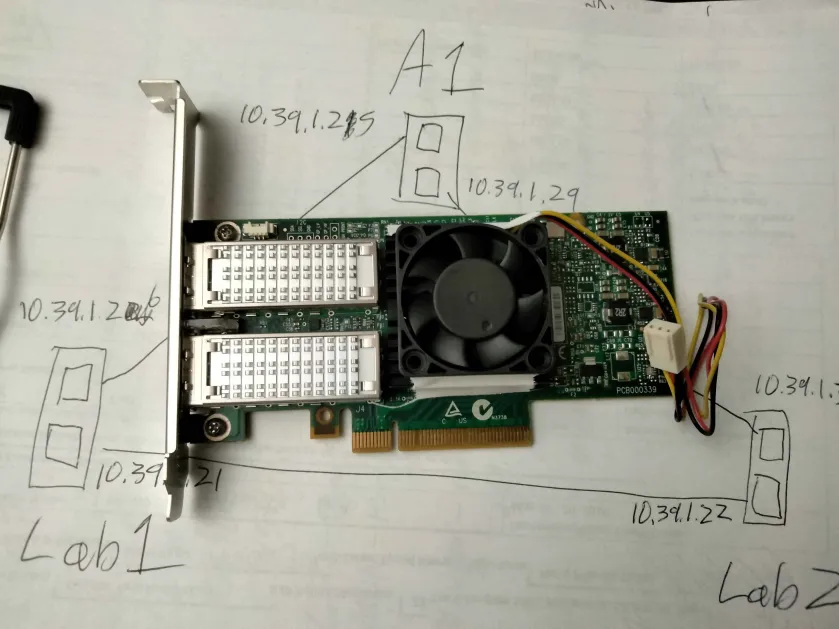

I even hooked them up using jank…

And a really great map

20241010, Day 54

Fixing up user accounts on IPMI and getting it a static address, which felt HUGE because everyone at work leaves theirs on dynamic, and it’s my job to get their management back when the reservation invariably screws up.
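Part of why dynamic BMC addresses bite is that a "static" address picked from inside the DHCP pool can still get handed to something else. A minimal Python sketch of the check worth doing before pinning an address; the function name, subnet, and pool range are hypothetical, not my actual network:

```python
import ipaddress

def is_safe_static(addr: str, subnet: str, dhcp_start: str, dhcp_end: str) -> bool:
    """True if addr is a usable host in subnet and sits outside the DHCP pool (hypothetical helper)."""
    ip = ipaddress.ip_address(addr)
    net = ipaddress.ip_network(subnet)
    # Must be inside the subnet and not the network/broadcast address.
    if ip not in net or ip in (net.network_address, net.broadcast_address):
        return False
    start = ipaddress.ip_address(dhcp_start)
    end = ipaddress.ip_address(dhcp_end)
    # Anything inside the pool can be leased to another device later.
    return not (start <= ip <= end)

if __name__ == "__main__":
    # Made-up lab numbers for illustration only.
    print(is_safe_static("192.168.1.20", "192.168.1.0/24", "192.168.1.100", "192.168.1.200"))
```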

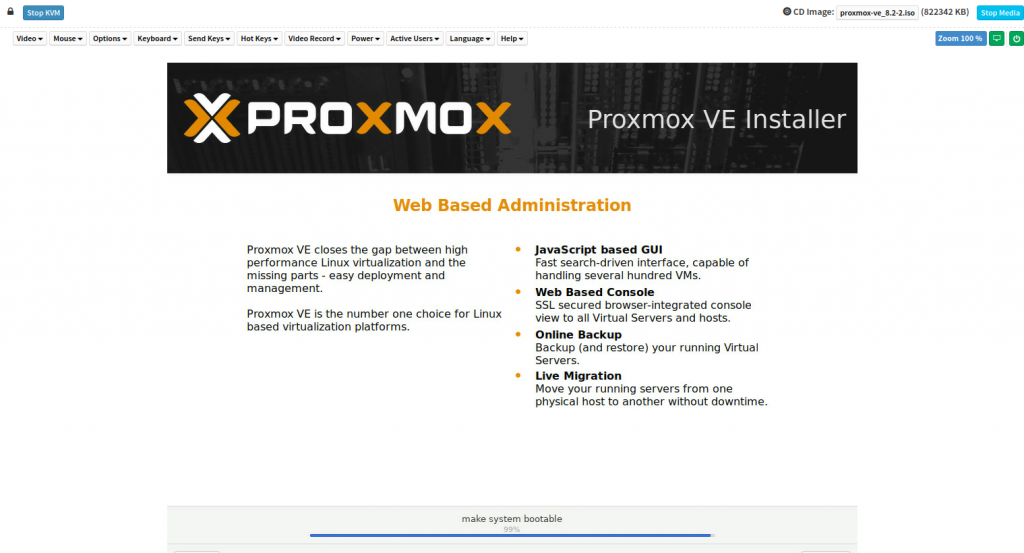

Then loaded Proxmox.

Only so much time in a day; most of these sessions run over an hour as well.

20241011, Day 55

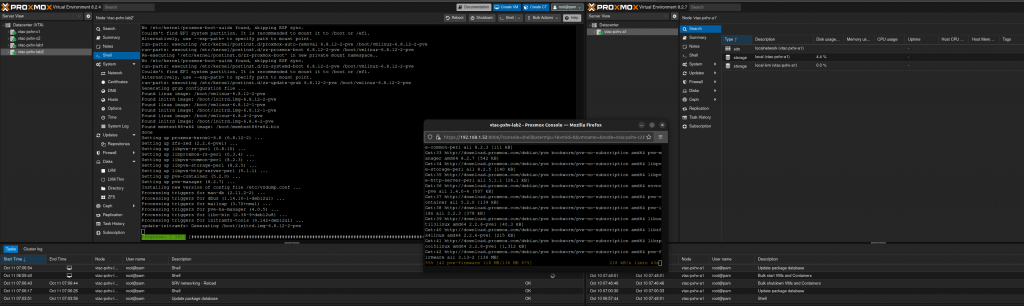

Fired everybody up and got them updating

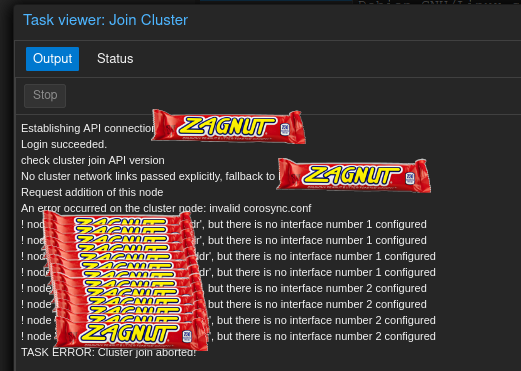

All set to have a fun time joining a fifth node until

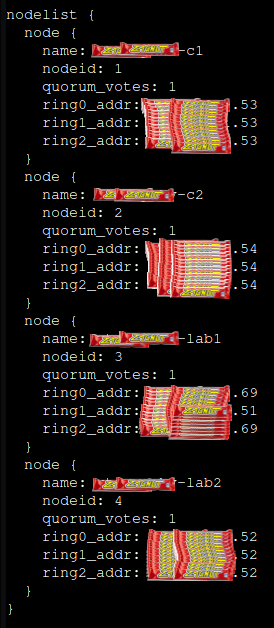

Some adventures with Corosync a few days ago messed it up. I might have to simulate my way out of this one.

20241012, Day 56

I figured out what was wrong with my links configs, and I figured out why the whole cluster would die when fixing the links.

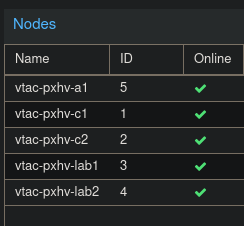

And the cluster is now five wide.

Huck

20241013, Day 57

Well,,,, uhhhhh

Had a stack of bad luck trying to get mesh networking going between three hosts. It starts out as three point-to-point networks, but it got complicated quickly with exotic network cards, hardware from an era that’s new to me, questions about used gear, and troubleshooting niche network configurations.
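For the curious, a full mesh of n nodes needs n(n-1)/2 point-to-point links, so three nodes means three links and two mesh ports per node. A Python sketch that carves one /30 per link out of a pool; the node names, pool, and helper names are hypothetical, not my real addressing:

```python
import ipaddress
from itertools import combinations

def plan_mesh(nodes, pool="10.15.15.0/24", prefix=30):
    """Give every pair of nodes its own small point-to-point subnet (hypothetical helper)."""
    subnets = ipaddress.ip_network(pool).subnets(new_prefix=prefix)
    plan = []
    for (a, b), net in zip(combinations(nodes, 2), subnets):
        # A /30 has exactly two usable host addresses, one per end of the cable.
        ip_a, ip_b = list(net.hosts())[:2]
        plan.append((a, str(ip_a), b, str(ip_b), str(net)))
    return plan

def ports_per_node(plan):
    """Count how many mesh ports each node needs."""
    counts = {}
    for a, _, b, _, _ in plan:
        counts[a] = counts.get(a, 0) + 1
        counts[b] = counts.get(b, 0) + 1
    return counts

if __name__ == "__main__":
    plan = plan_mesh(["node1", "node2", "node3"])
    for link in plan:
        print(link)
    print(ports_per_node(plan))
```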

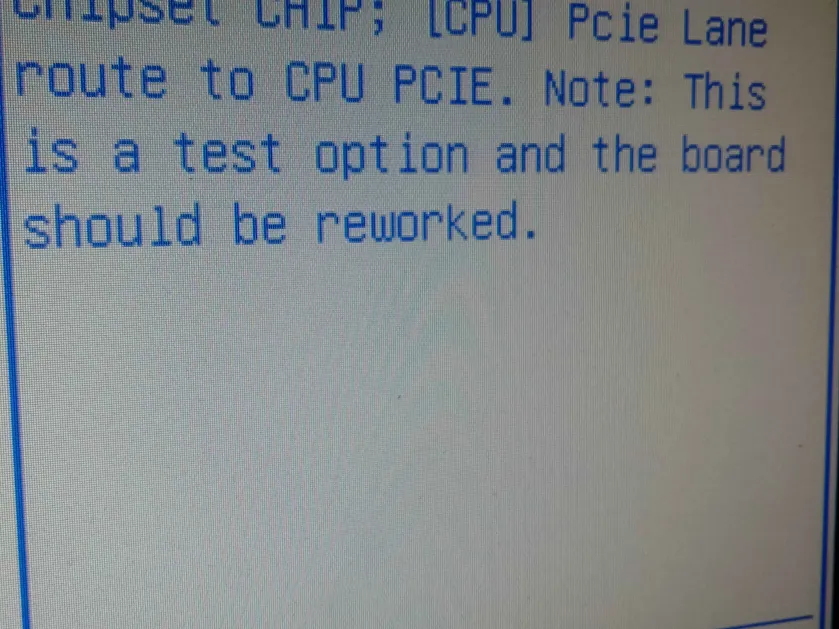

Even found a BIOS option that didn’t belong there and causes the system not to boot if set.

One of those days where you turn the equipment off and walk away; I’ve got more ideas, but it’s not going to work quickly, and unfortunately I’m not going to stop trying.

20241014, Day 58

Got pings across the mesh network. Might be proofing and testing for a day or two. Time for actively cooled NICs

20241015, Day 59

I bested the mesh, all hardware tested, including the spares. Now I can finally leave good feedback for that eBay seller.

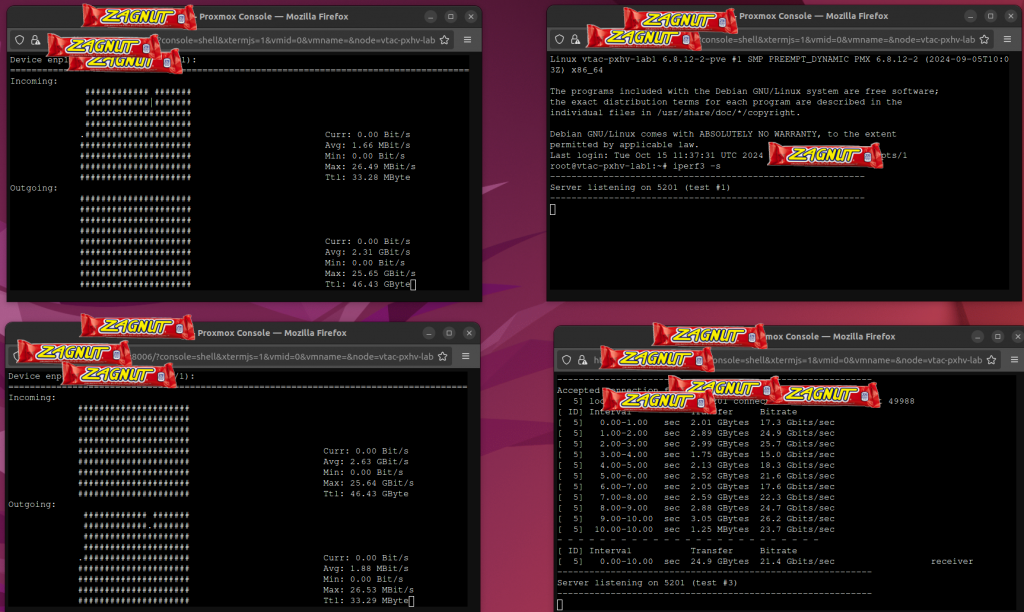

Not quite getting the full 40Gb/sec, but I haven’t tweaked anything yet. MTU is still at 1500.
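Rough ceiling math on what 1500-byte frames can carry. This Python sketch assumes IPv4 and TCP with no header options, and counts the Ethernet preamble/SFD, header, FCS, and inter-frame gap as per-frame wire overhead:

```python
# Per-frame bytes on the wire beyond the IP packet:
# 8 preamble+SFD, 14 Ethernet header, 4 FCS, 12 inter-frame gap.
ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12
# IPv4 + TCP headers with no options (assumption; Linux TCP timestamps
# are on by default and would cost another 12 bytes per segment).
IP_TCP_HEADERS = 20 + 20

def tcp_goodput_gbps(link_gbps: float, mtu: int) -> float:
    """Best-case TCP payload rate for a given link speed and MTU."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETH_WIRE_OVERHEAD
    return link_gbps * payload / on_wire

for mtu in (1500, 9000):
    print(mtu, round(tcp_goodput_gbps(40, mtu), 2))
```

That works out to roughly 38 Gb/s at MTU 1500 and roughly 39.7 Gb/s at 9000, so frame size alone only accounts for a few percent; single-stream tests and CPU limits often matter more at 40Gb.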

20241016, Day 60

I’ve moved cluster traffic* to the high speed mesh network. It’s so cool to see the little traffic blinking~

*Traffic for the three enterprise & block two class nodes, as they are the only three servers with 40Gbit NICs. All five nodes can talk over gigabit, but nodes with 40Gbit connections to each other will prioritize that.
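That "prioritize" bit is Corosync's doing: with kronosnet, each link gets a `knet_link_priority`, and in the default passive link mode the highest-priority link that is up carries the traffic (Proxmox exposes this as `priority=` on `pvecm`'s `--linkN` options). A toy Python model of that rule for one pair of nodes; the link names and priority values are hypothetical, and ties here just fall back to list order, which is a simplification rather than knet's behavior:

```python
from dataclasses import dataclass

@dataclass
class Link:
    number: int     # corosync link number (link0, link1, ...)
    name: str       # hypothetical label for readability
    priority: int   # stands in for knet_link_priority
    up: bool = True

def active_link(links):
    """Simplified passive mode: pick the highest-priority link that is up."""
    candidates = [link for link in links if link.up]
    if not candidates:
        return None
    # max() keeps the first of equal-priority links (model choice only).
    return max(candidates, key=lambda link: link.priority)

if __name__ == "__main__":
    links = [Link(0, "mesh-40g", 20), Link(1, "gigabit", 10)]
    print(active_link(links).name)   # mesh preferred while it's up
    links[0].up = False
    print(active_link(links).name)   # falls back to gigabit
```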

20241017, Day 61

Running another simulation before installing Ceph on the cluster…

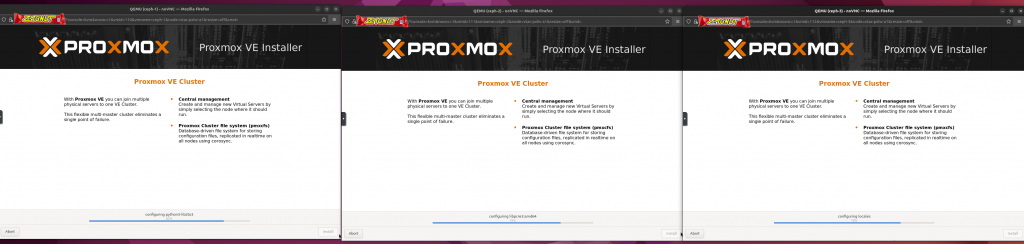

Loaded up another Ceph test cluster.

Point-to-point Coro mesh on 40Gbit: over a day to set up

Point-to-point Coro mesh in VMs: an hour

20241018, Day 62

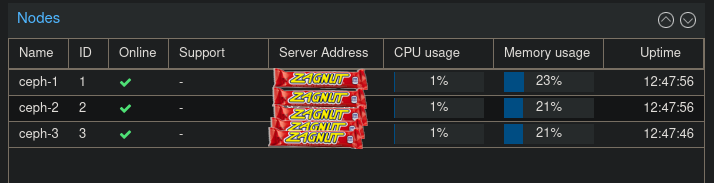

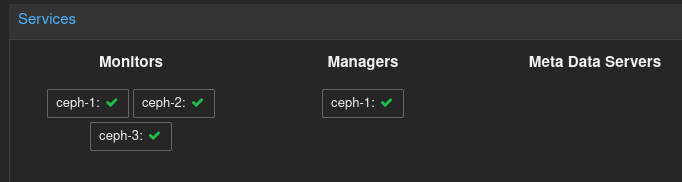

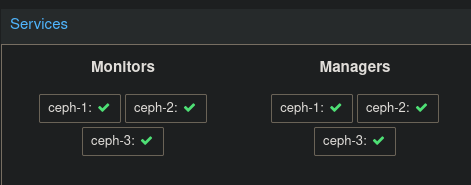

Ceph is again running in test…

Reading into moving the private traffic onto its point-to-point networks. The setup only allows a single vanilla IP for that traffic; anything beyond that you need to add to the config files.
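For reference, Ceph’s `public_network` and `cluster_network` options in `ceph.conf` take a subnet, and as I read Ceph’s network config reference, a comma-separated list of subnets is allowed too; each daemon binds to a local address that falls inside one of them. A small Python sketch of that matching rule, with hypothetical subnets and helper names (it models the rule, it is not Ceph’s code):

```python
import ipaddress

def parse_networks(value: str):
    """Parse a ceph.conf-style comma-separated subnet list (hypothetical helper)."""
    return [ipaddress.ip_network(part.strip()) for part in value.split(",") if part.strip()]

def matching_network(addr: str, networks):
    """Return the first configured subnet containing addr, or None."""
    ip = ipaddress.ip_address(addr)
    for net in networks:
        if ip in net:
            return net
    return None

if __name__ == "__main__":
    # Made-up value, e.g. cluster_network = 10.15.15.0/30, 10.15.16.0/24
    nets = parse_networks("10.15.15.0/30, 10.15.16.0/24")
    print(matching_network("10.15.16.7", nets))
    print(matching_network("192.168.1.5", nets))
```

An address that matches none of the listed subnets is one the daemon won’t pick for that network.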

20241019, Day 63

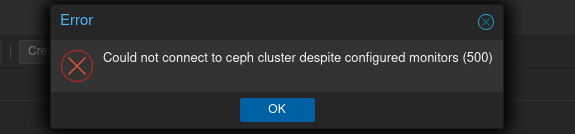

And Ceph is not running; I broke it good trying to cluster-ize it.

That’s labbin’: you make stuff, you break stuff. I’ve got some leads on what went wrong and can even roll back to snaps if it’s that bad.

20241020, Day 64

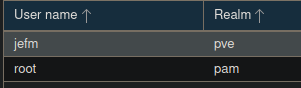

Took a moment to look at PVE groups. Now I don’t need to log in as root all the time.

This week’s cat: Scone

20241021, Day 65

I have either found a flaw in how I thought fast clustering was going to work, OR found that cluster networking can’t do what I thought it would, OR I’m just munging something someone else did into something I want it to do.

Got it started but time for bed. For a laugh, Ceph is back at least, but only in simulation and not storing anything.

20241022, Day 66

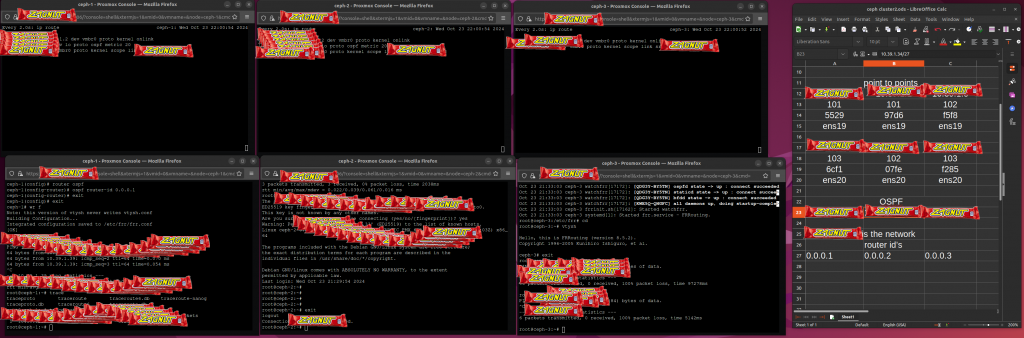

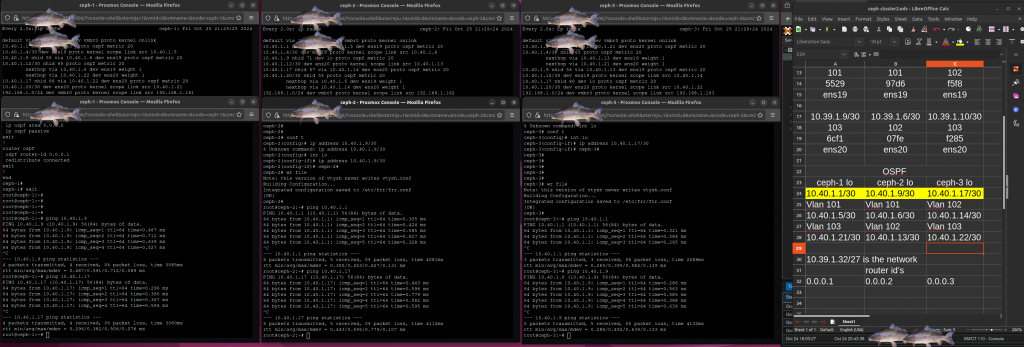

I had an eye-opening morning with frr and vtysh, and got OSPF going. I worked out some low-lying misunderstandings of how I thought Ceph clustering would work, wound up breaking a chunk of things, and am redoing the cluster experiment with more granular snapshots.
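Why OSPF fits a three-node mesh: every router floods its link states, then each one runs a shortest-path-first (Dijkstra) calculation over the same map, so pulling one cable just reroutes through the third node. A textbook Dijkstra in Python over a hypothetical mesh (node names and costs are made up, and this is not FRR’s implementation):

```python
import heapq

def spf(graph, source):
    """Dijkstra over {node: {neighbor: cost}}; returns (distances, first hop per destination)."""
    dist = {source: 0}
    first_hop = {}
    heap = [(0, source, source)]
    done = set()
    while heap:
        d, node, hop = heapq.heappop(heap)
        if node in done:
            continue
        done.add(node)
        if node != source:
            first_hop[node] = hop
        for nbr, cost in graph[node].items():
            nd = d + cost
            if nbr not in done and nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                # Leaving the source, the first hop is the neighbor itself.
                heapq.heappush(heap, (nd, nbr, nbr if node == source else hop))
    return dist, first_hop

mesh = {
    "pve1": {"pve2": 10, "pve3": 10},
    "pve2": {"pve1": 10, "pve3": 10},
    "pve3": {"pve1": 10, "pve2": 10},
}
# Same mesh with the pve1 <-> pve3 cable pulled.
broken = {
    "pve1": {"pve2": 10},
    "pve2": {"pve1": 10, "pve3": 10},
    "pve3": {"pve2": 10},
}

if __name__ == "__main__":
    print(spf(mesh, "pve1"))
    print(spf(broken, "pve1"))
```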

20241023, Day 67

Lab sim is booted back up. While writing the frr runbook I found OSPF acting very odd. If it was doing this a few pages ago, no wonder the Ceph OSDs were flipping out…

20241024, Day 68

I fixed the OSPF

It’s routing two nodes and is great, but I’m too tired for the third.
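One thing worth knowing when mixing 40Gb and gigabit under OSPF: interface cost comes from a reference bandwidth divided by the link bandwidth, floored at 1, and FRR’s default `auto-cost reference-bandwidth` is 100 Mbit/s, so every link at 100 Mbit/s or faster gets cost 1. A Python sketch of that arithmetic (not necessarily what was going on here; the example values divide evenly because implementations differ on rounding):

```python
def ospf_cost(ref_bw_mbps: int, if_bw_mbps: int) -> int:
    """OSPF interface cost: reference bandwidth / interface bandwidth, never below 1."""
    return max(1, ref_bw_mbps // if_bw_mbps)

# 100 = FRR's default reference bandwidth; 400_000 is a hypothetical raised value.
for ref in (100, 400_000):
    print(ref, ospf_cost(ref, 1_000), ospf_cost(ref, 40_000))
```

With the default, gigabit and 40Gb look identical to OSPF; raising the reference bandwidth (or setting `ip ospf cost` per interface) lets the 40Gb links win.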

20241025, Day 69

I did the thing

“When in doubt, add another subnet”

20241026, Day 70