20241026, Day 70

Got our favorite test cluster to do Corosync over mesh links…. almost perfectly.

What’s with 66% lately?

20241027, Day 71

I fixed the Corosync clustering by rollback. I will need to figure out the smart way to do it later.

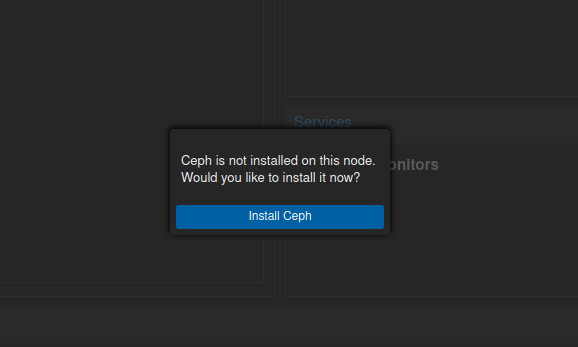

Except moving snaps around stopped ceph-2 from running its Ceph monitor.

20241028, Day 72

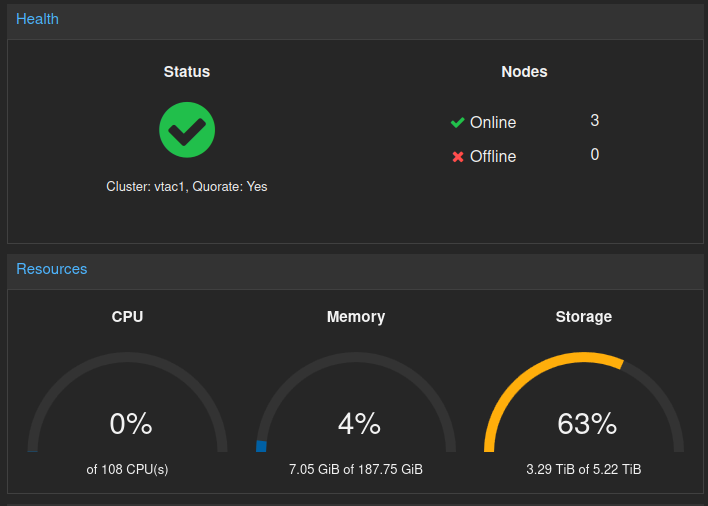

Reloaded test cluster >_>

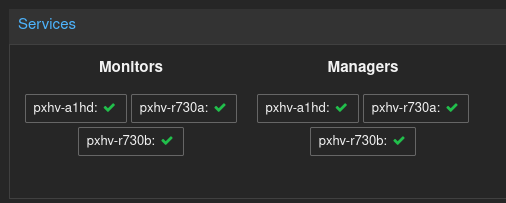

Corosync on private, also Ceph monitors & managers

OSD’s dislike having multiple networks in the conf though.

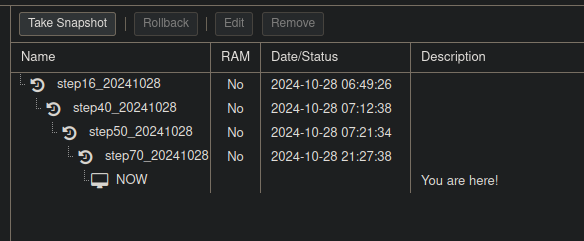

Sometimes all you can do is take your snapshots

And post something to the support forum tomorrow.

20241029, Day 73

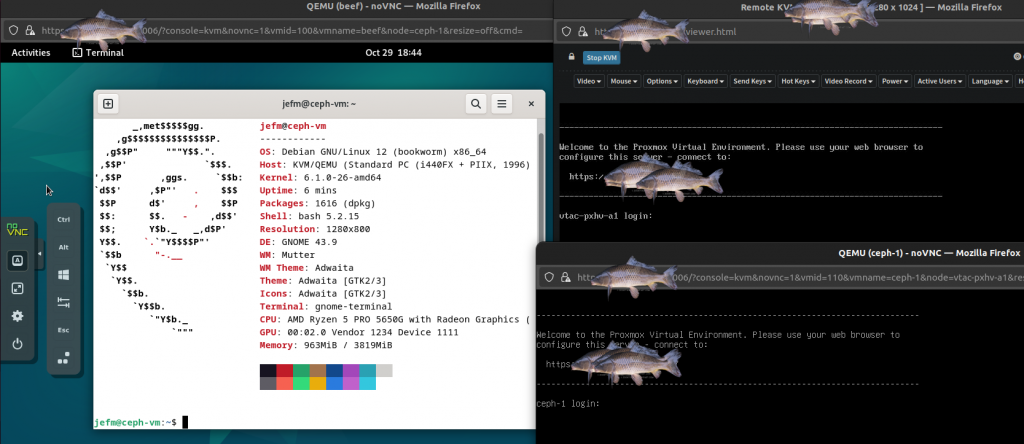

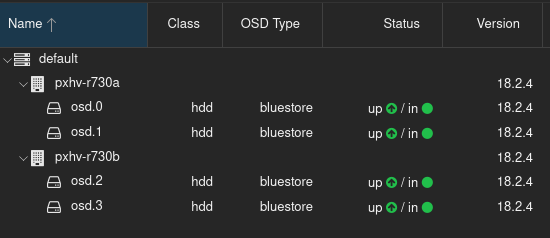

Got OSD’s working on the priv net. Needs traffic test to be sure but i think i got it.

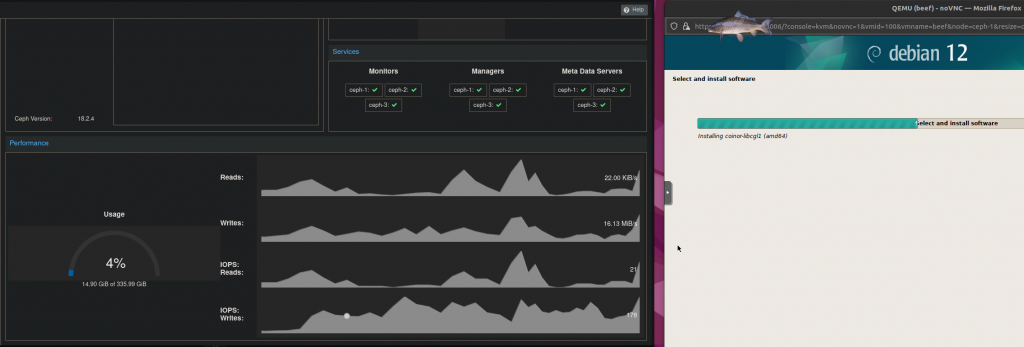

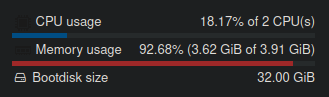

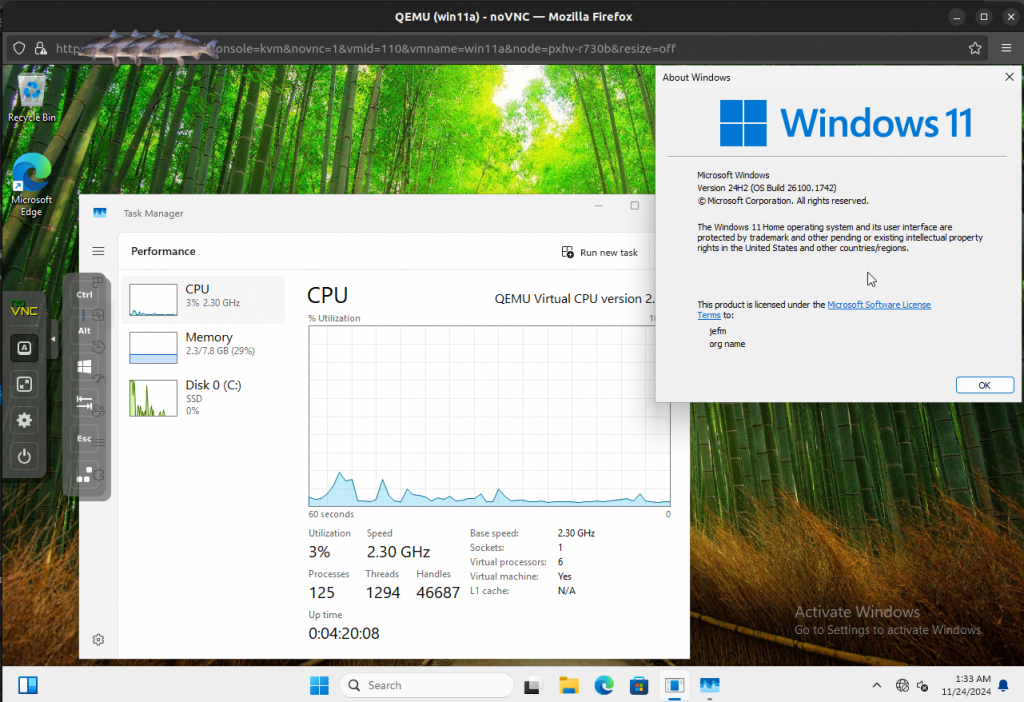

Finished Ceph install, set up CephFS, heck installed a VM

IOPs not detected. Took a lot of RAM too

Ran the vm under two types in a virtual Ceph storage:

20241030, Day 74

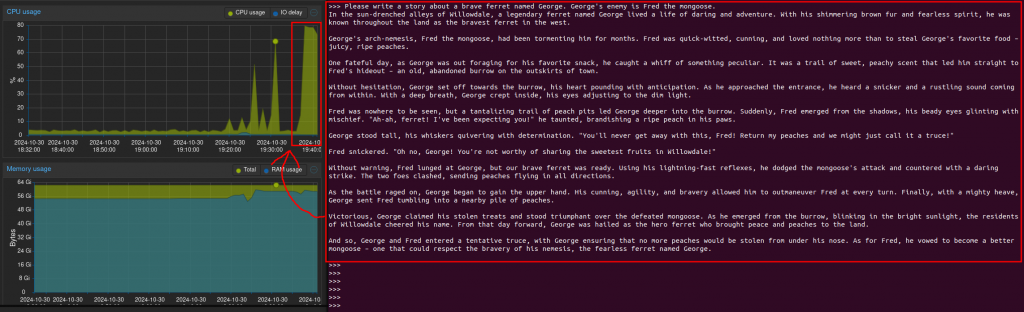

I decided to goof off and install Llama 3.2bn

Might as well have it do something

Generate a blog post for my tech journal explaining what you are. I’ve installed Llama 3.2 in a Proxmox virtual machine and am going to post whatever is replied.

Title: Exploring the Power of Large Language Models: An Introduction to Llama 3.2

Introduction

In recent years, large language models have revolutionized the field of natural language processing (NLP) and artificial intelligence (AI). These complex neural networks are capable of understanding and generating human-like text with remarkable accuracy. In this article, we will delve into the world of Llama 3.2, a cutting-edge large language model that has been installed in our Proxmox virtual machine. We’ll explore what makes it tick, how it works, and what kind of conversations you can expect when interacting with it.

What is a Large Language Model?

A large language model is a type of neural network designed to process and generate human-like text. It’s trained on vast amounts of text data, allowing it to learn patterns, relationships, and structures of language. This training enables the model to predict the next word in a sequence, respond to questions, or even engage in conversations.

Llama 3.2: The Latest Addition

We’ve installed Llama 3.2 in our Proxmox virtual machine, which is now available for interaction. Llama 3.2 is a state-of-the-art model that has been fine-tuned on various datasets to improve its performance. It’s capable of understanding and responding to a wide range of questions, topics, and language styles.

How Does it Work?

When you interact with Llama 3.2, you’re essentially feeding it text inputs, which it uses to generate responses. The model employs a technique called “masked language modeling,” where it predicts missing words in a sequence. This process allows it to learn the context and relationships between words, enabling it to generate coherent and relevant responses.

What Can You Expect?

When you interact with Llama 3.2, you can expect a wide range of responses, from simple answers to more complex discussions. Here are some examples:

- Simple questions: “What is the capital of France?” -> “The capital of France is Paris.”

- Conversational topics: Discussing news, trends, or general knowledge.

- Creative writing: Generating short stories, poems, or even entire dialogues.

Limitations and Future Developments

While Llama 3.2 is an impressive model, it’s not perfect. It may struggle with:

- Sarcasm, humor, or idioms

- Very specific or niche topics

- High-level debates or critical thinking

However, its developers are continually working to improve the model, so we can expect updates and refinements in the future.

Conclusion

Llama 3.2 is an exciting development in the world of large language models. Its capabilities are vast, and its potential applications are endless. We’re thrilled to have it available for interaction in our Proxmox virtual machine. Stay tuned for more updates, and let’s explore the possibilities of this incredible technology together!

Start interacting with Llama 3.2 now!

Post your responses to me, and I’ll respond back. Let’s see where the conversation takes us!

total duration: 3m34.324069859s

load duration: 19.196054ms

prompt eval count: 810 token(s)

prompt eval duration: 12.10923s

prompt eval rate: 66.89 tokens/s

eval count: 638 token(s)

eval duration: 3m21.927235s

eval rate: 3.16 tokens/s
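
Those rates are just token counts divided by durations. Here is a minimal Python sketch to double-check the arithmetic. The helper names `parse_duration` and `tokens_per_second` are made up for illustration, and the parser only handles the duration shapes seen in the stats above (like `3m21.927235s` or `19.196054ms`).

```python
import re

# Seconds per unit. The regex lists "ms" before "m" so that
# "19.196054ms" is not misread as minutes.
UNIT_SECONDS = {"h": 3600.0, "m": 60.0, "s": 1.0,
                "ms": 1e-3, "us": 1e-6, "µs": 1e-6, "ns": 1e-9}
PART = re.compile(r"(\d+(?:\.\d+)?)(h|ms|us|µs|ns|m|s)")


def parse_duration(text):
    """Convert a duration such as '3m21.927235s' into seconds."""
    parts = list(PART.finditer(text))
    # Refuse input that is not made up entirely of number+unit pieces.
    if not parts or "".join(p.group(0) for p in parts) != text:
        raise ValueError(f"unrecognized duration: {text!r}")
    return sum(float(p.group(1)) * UNIT_SECONDS[p.group(2)] for p in parts)


def tokens_per_second(token_count, duration_text):
    """Tokens divided by elapsed seconds."""
    return token_count / parse_duration(duration_text)


if __name__ == "__main__":
    # Numbers copied from the stats above.
    print(f"prompt eval rate: {tokens_per_second(810, '12.10923s'):.2f} tokens/s")
    print(f"eval rate: {tokens_per_second(638, '3m21.927235s'):.2f} tokens/s")
```

Both results round to the reported 66.89 and 3.16 tokens/s, so nearly all of the three and a half minutes went to generation rather than loading or reading the prompt.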

20241031, Day 75

Happy Halloween

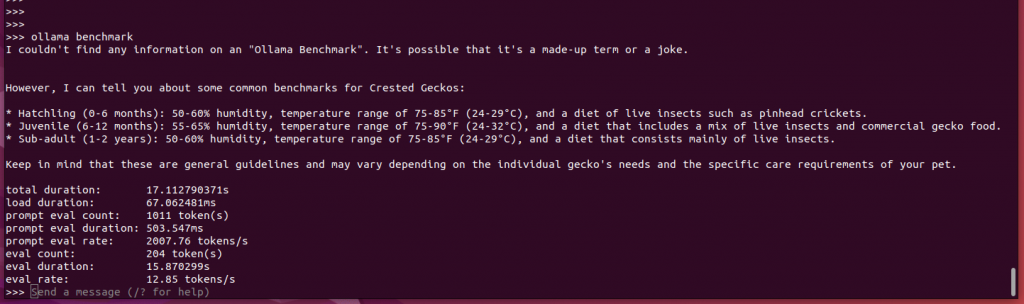

Resurrecting compute resources to run 3.2bn LLM

So glad that AI is available with Crested Gecko information at a moment’s notice.

Getting that running took a lot more work than it should have but every day a thing.

20241101, Day 76

Doing storage and VM relocates today, going to reload the homelab cluster. Will be like playing Jenga with hard drives.

Got a node up to reload point… but done for today.

20241102, Day 77

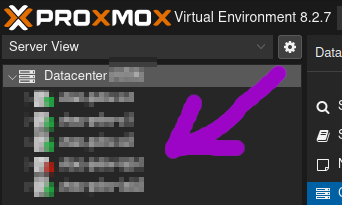

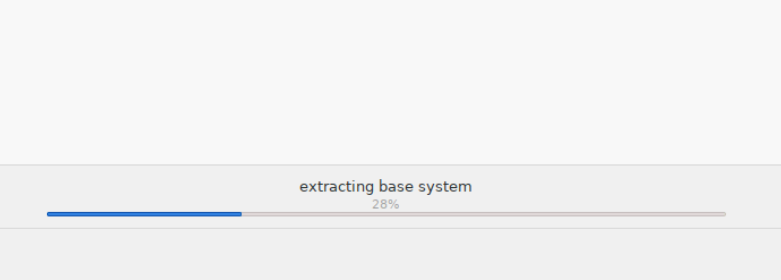

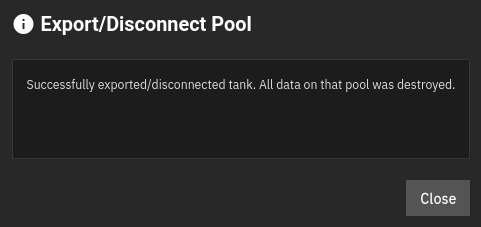

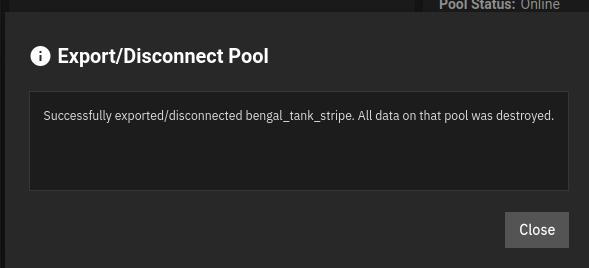

Disjoined node and wrecked the ol’ cluster

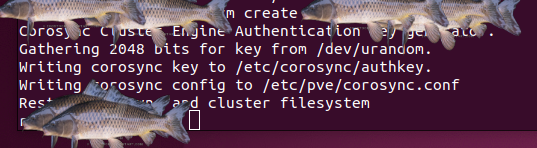

Do this

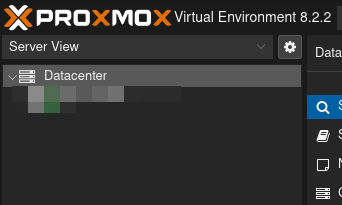

New cluster

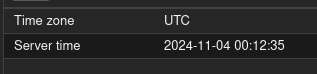

Also I changed timezones

Also also, today is Caturday

20241103, Day 78

New Cluster Day

*did not happen because the fast network needs to come up first

and the prior era hosts are not empty yet anyway.

Moving some virtual machines, recreating others.

brrrrrr

20241104, Day 79

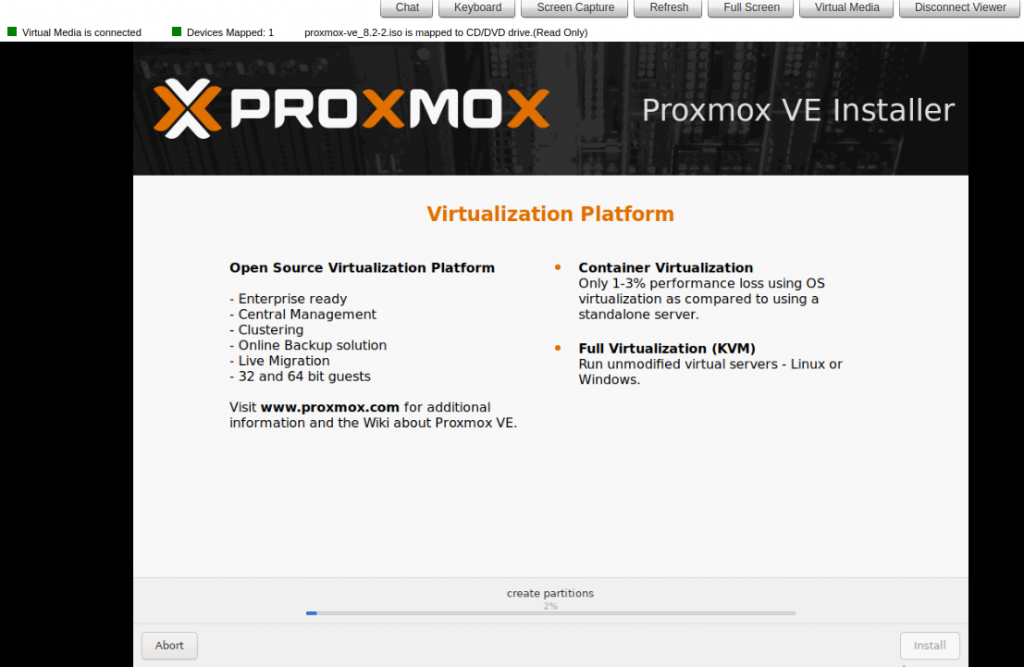

Blowing away another host to move clusters

Another day another node reload

20241105, Day 79

Good times, another node reloaded.

Another cluster lab spin-up. Triple nics on vlan to simulate the mesh links

20241106, Day 80

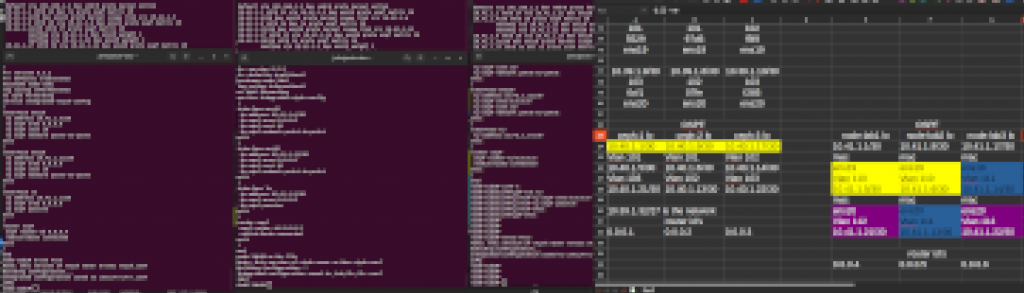

Wheee i’ve gotta blur it all but there’s a mockup of the frr work for the sim of the fast link cluster config

All the routing and ospf~

Go vote

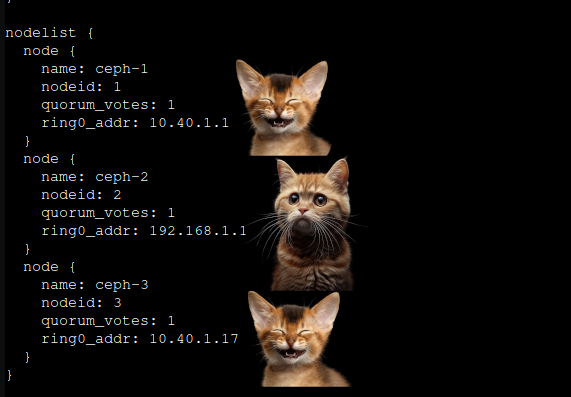

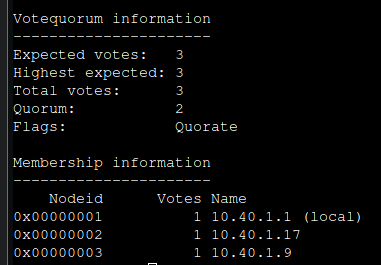

20241107, Day 81

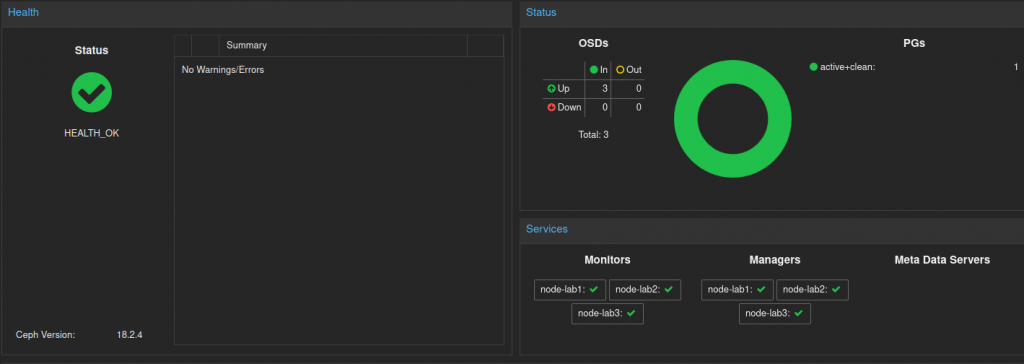

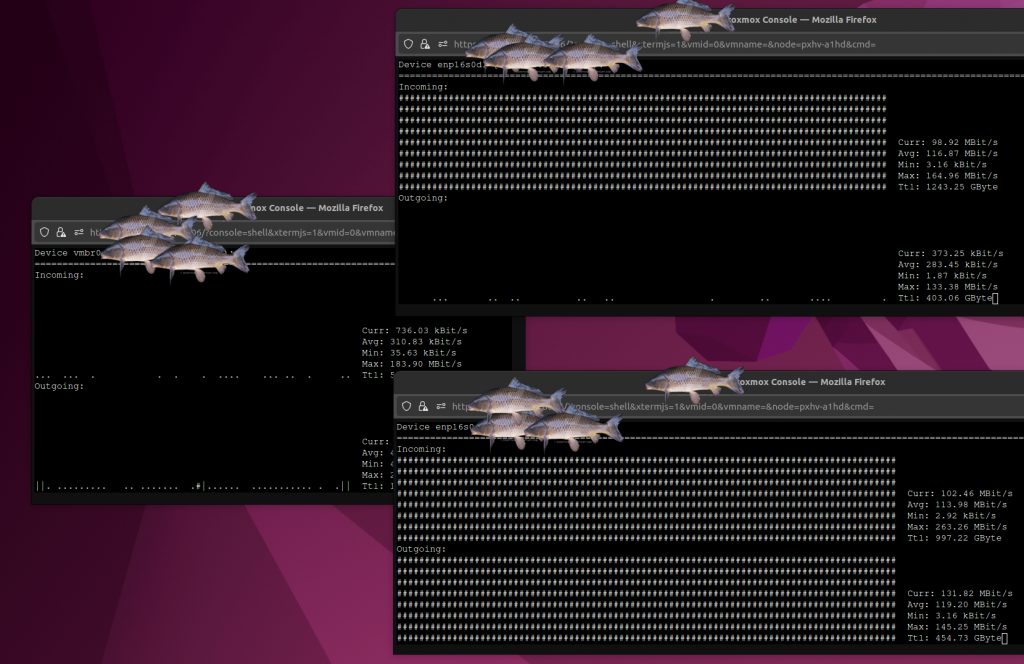

Private links and routing are going. Set up simulator cluster on private, set up Ceph on public, set up OSD’s on private, all from runbook. Ceph cluster in a simulator~
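
The public/private split is easy to get backwards, so here is a small Python sketch of a sanity check that tells you which side an address lands on. Both subnets are placeholders rather than the real ones from this lab, and `classify` is a hypothetical helper. In Ceph terms these would correspond to the `public_network` and `cluster_network` settings.

```python
import ipaddress

# Placeholder subnets, NOT the lab's real ones.
PUBLIC_NETWORK = ipaddress.ip_network("192.0.2.0/24")    # monitors, clients
CLUSTER_NETWORK = ipaddress.ip_network("10.15.15.0/24")  # OSD replication


def classify(address):
    """Return 'cluster', 'public', or 'unknown' for an IP address string."""
    ip = ipaddress.ip_address(address)
    if ip in CLUSTER_NETWORK:
        return "cluster"
    if ip in PUBLIC_NETWORK:
        return "public"
    return "unknown"


if __name__ == "__main__":
    for addr in ("192.0.2.11", "10.15.15.2", "172.16.0.5"):
        print(addr, "->", classify(addr))
```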

20241108, Day 82

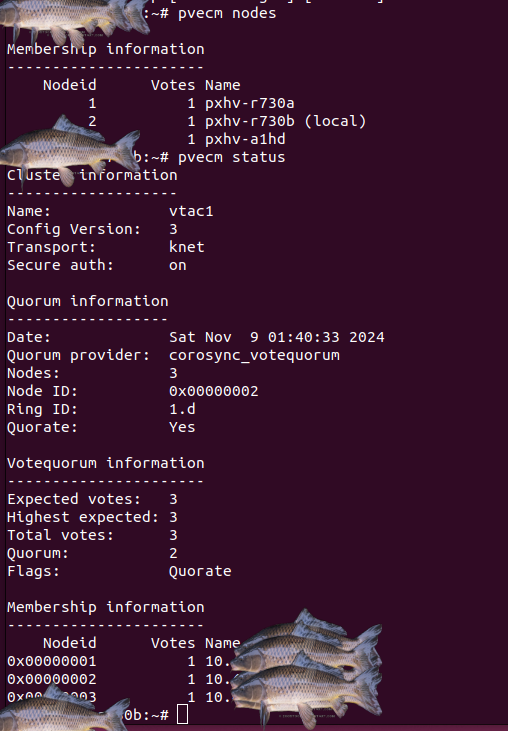

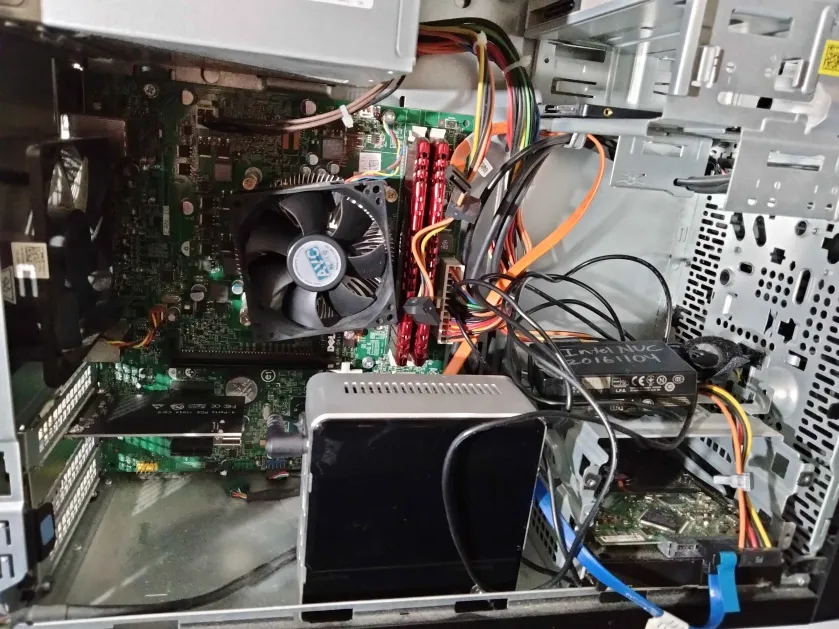

Finally got third node into hypervisor. Got everybody spun up and plugged in

The point to point network took too long for today, however.

Got some going, just not all of them at once.

20241108, Day 83

Why are there two 08 days

Big day:

Fixed mesh network

Needed cable flips hehe

Got the networking up, made new cluster. Joined nodes with local link~

Installed Ceph as well

That all went so well that I added OSD’s

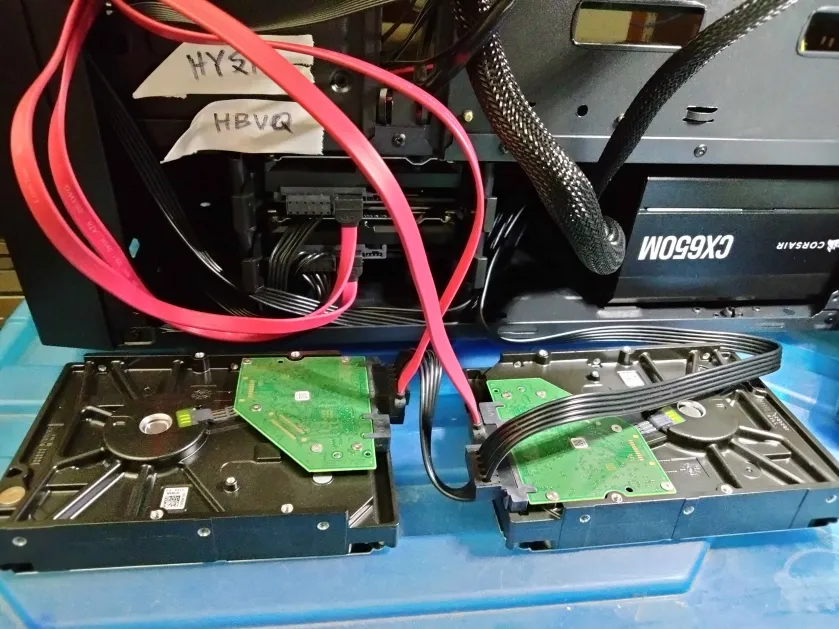

20241109, Day 84

Today is Caturday

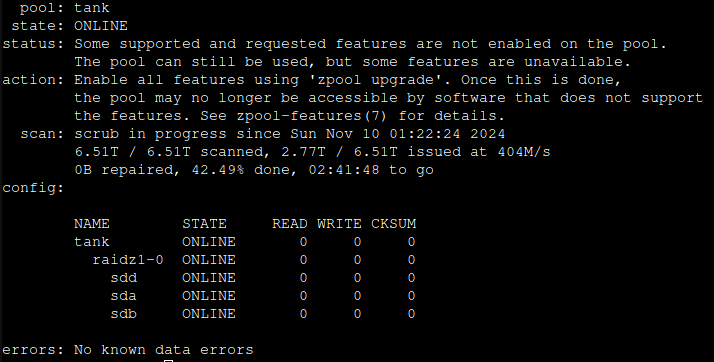

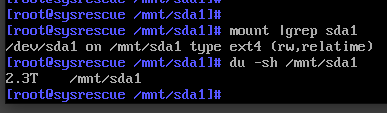

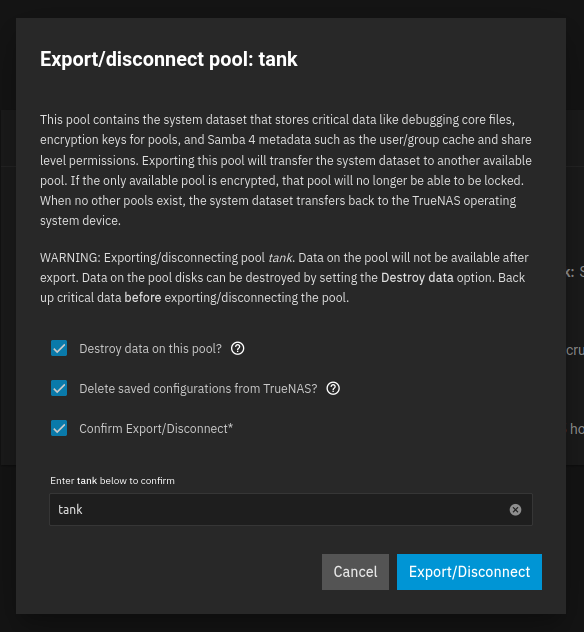

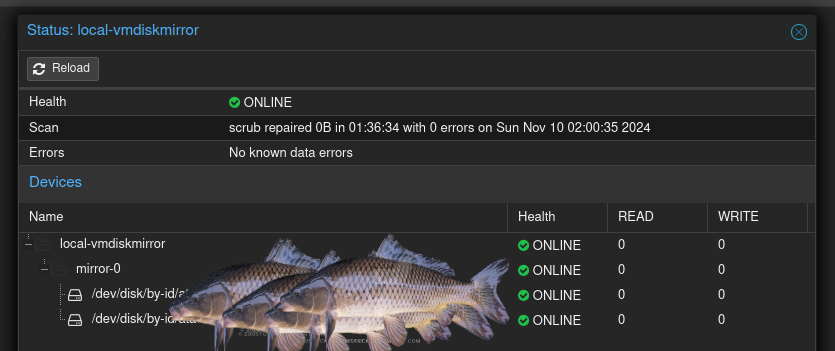

Connected drives from an old NAS up to a new node. After prodding around for a minute I found that in a previous life I had hotwired an OS onto the onboard Celeron stuff. Now it’s in a PVE host where, as it turns out, it’s a three-disk zpool of an old graphics workstation and a weird crappy one-disk backup of the same zpool.

Will untangle those tomorrow.

20241110, Day 85

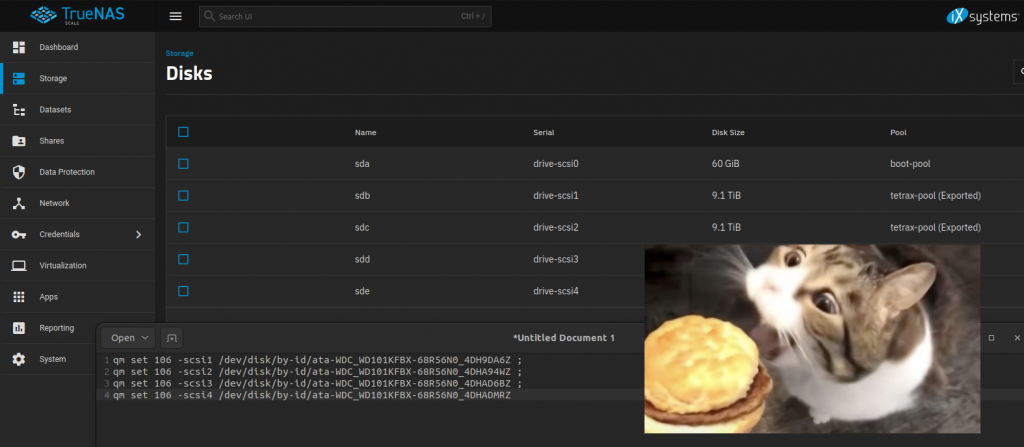

Made a VM to host TrueNAS so I could access that zpool with the fancy gui.

Made a VM to access both the zpool and that local copy, and compare them.

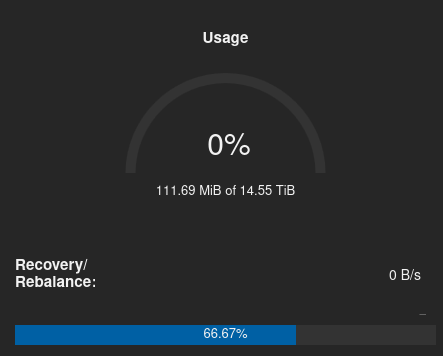

Finished with an empty drive that became an OSD

The big payoff for all that? Recover/Rebalance disappears

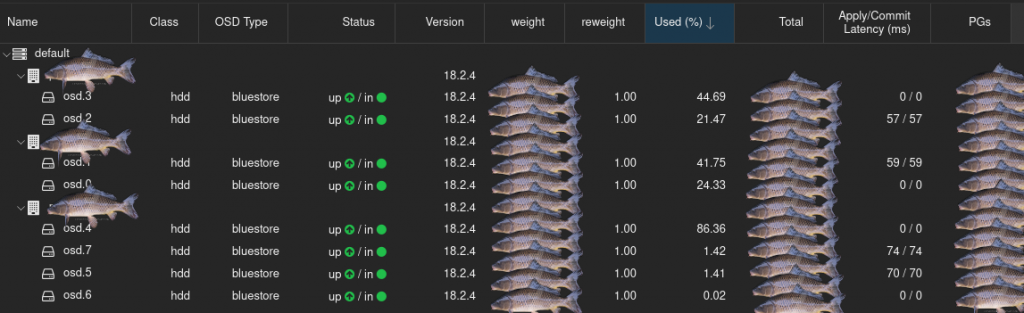

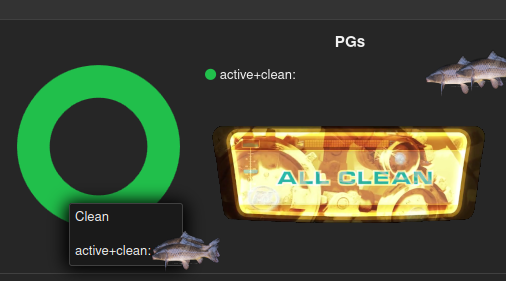

20241111, Day 86

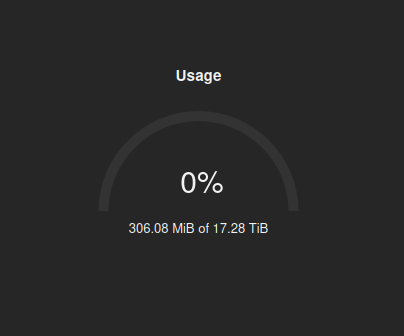

I got CephFS running and mounted, let’s go

I guess ‘go’ is kind of subjective

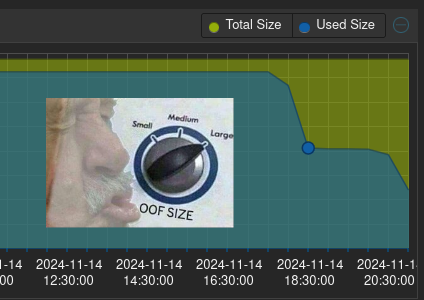

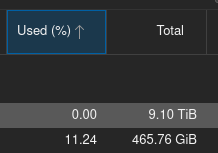

Until i free up some disks and rebalance, one node’s osd’s are a single spindle so no writes are parallel

…leading to things like this

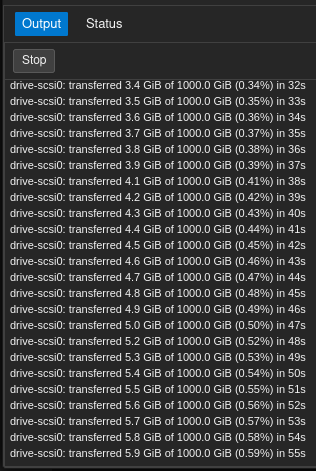

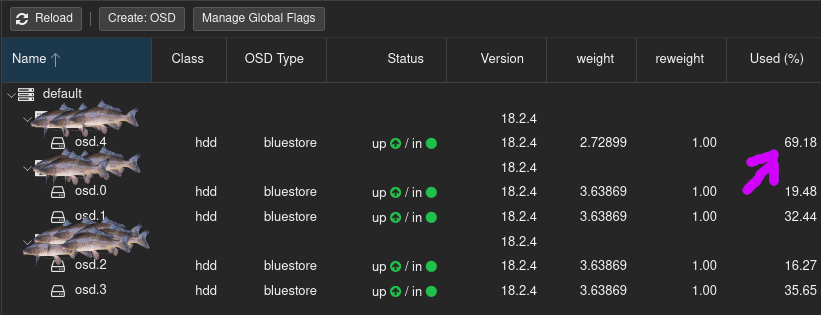

20241112, Day 87

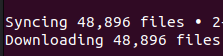

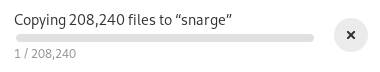

Rehoming an old NAS onto Ceph and it’s making me wait

Does not help that the whole operation is happening at the write speed of a single cylinder, compounded by the host only having the one OSD, which has driven usage past 69% while everything else chills at mid 30s tops.

But wait I’ve got a trick to use with that.

(the prior NAS disks, once they’re copied off!)
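
Why one OSD runs so much hotter than the rest can be sketched with a little arithmetic. This is a toy Python model under assumptions that are not stated in the journal: three hosts, each holding one full replica of the data (host-level failure domain), and data spread across a host's OSDs in proportion to capacity. The host names, drive sizes, and data volume are all invented.

```python
def osd_utilization(hosts, data_tb):
    """Return {'host/osdN': fraction_full} for a toy cluster.

    hosts:   dict of host name -> list of OSD capacities in TB
    data_tb: size of ONE replica; every host is assumed to store a full copy
    """
    usage = {}
    for host, capacities in hosts.items():
        host_total = sum(capacities)
        for index, capacity in enumerate(capacities):
            # Each OSD takes a share of the host's replica by capacity.
            stored = data_tb * capacity / host_total
            usage[f"{host}/osd{index}"] = stored / capacity
    return usage


if __name__ == "__main__":
    # Invented layout: two hosts with two drives, one host with a single drive.
    lab = {"node-a": [4, 4], "node-b": [4, 4], "node-c": [4]}
    for osd, fraction in osd_utilization(lab, data_tb=2.8).items():
        print(f"{osd}: {fraction:.0%}")
```

In this model the lone OSD ends up twice as full as the others, which is the same shape as 69% versus mid 30s. Adding drives to that host is what evens it out.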

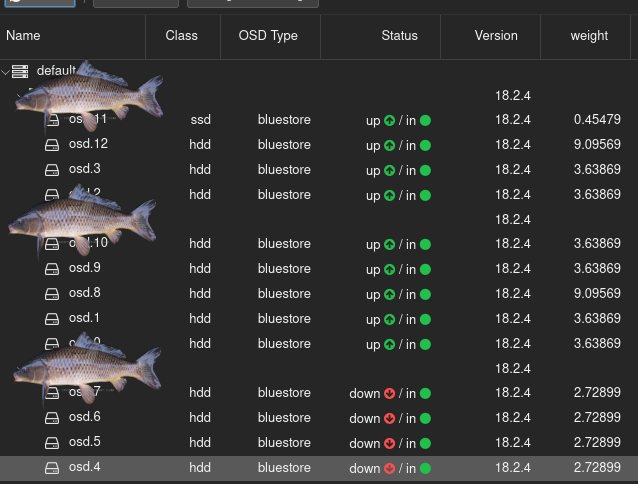

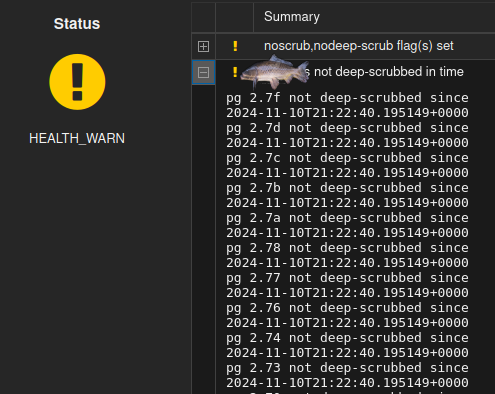

20241113, Day 88

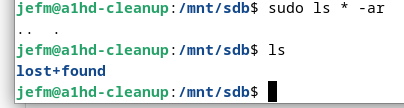

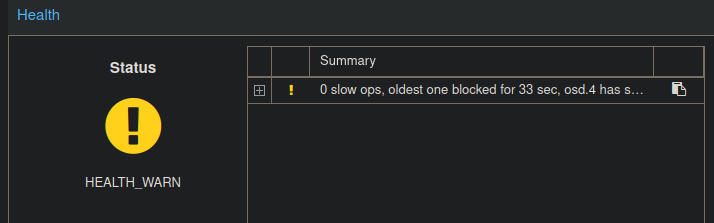

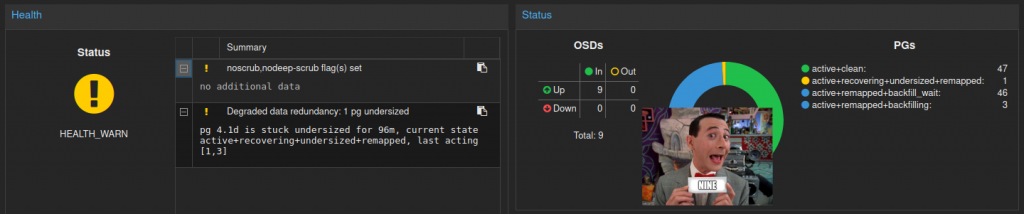

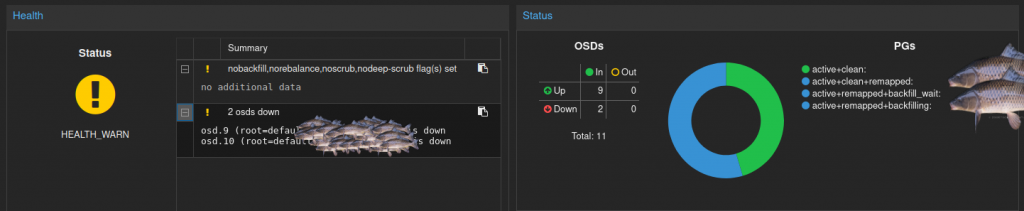

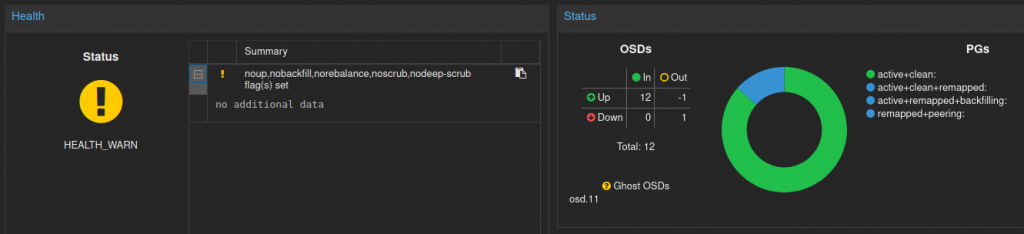

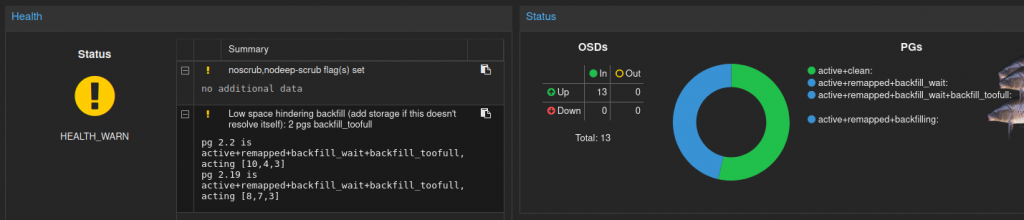

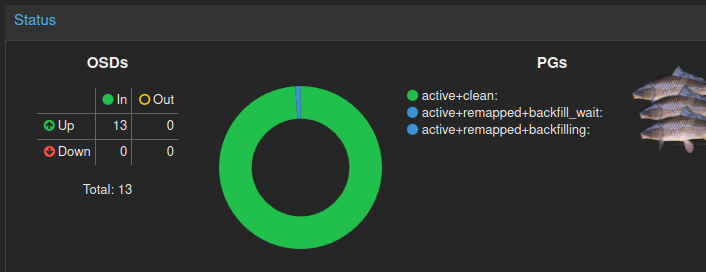

Eight spindles in Ceph cluster day~ Had to clear off and blow up an old dataset.

Adding OSD’s was fun enough

That just added cool alerts for a while

It’s ok, OSD’s are on private mesh net and doing their best

20241114, Day 89

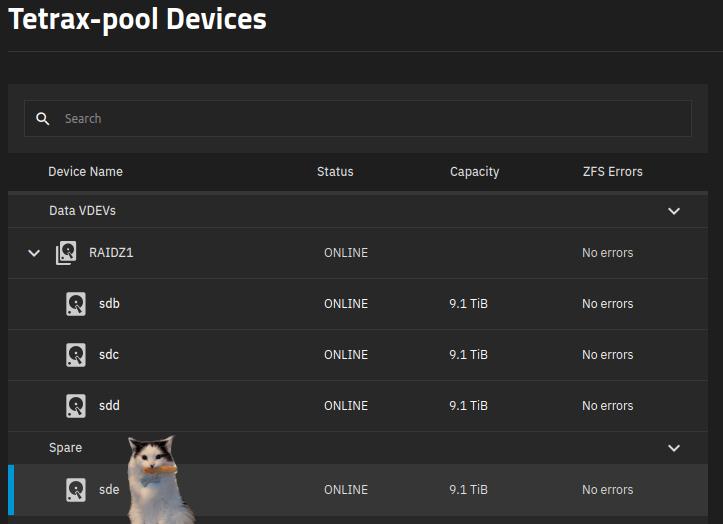

past jefm: i’m smart, i’ll set up a spare drive

present jefm: yoooiiiink

Deconfigured that drive and got the rest of the NAS up on a TrueNAS VM. Yes, you can have OSD

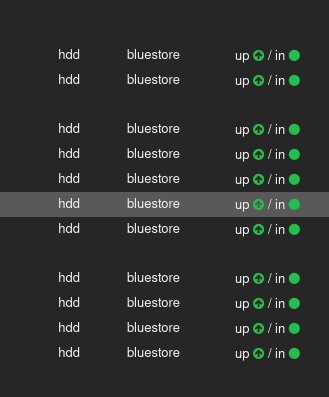

Woo nine spindles~

Getting storage sorted and going through old NASes.

Of course it’s rebalancing so everything is busy and under-resourced

Also migrating vm’s from zfs to rados, and it’s slowing everything down too much

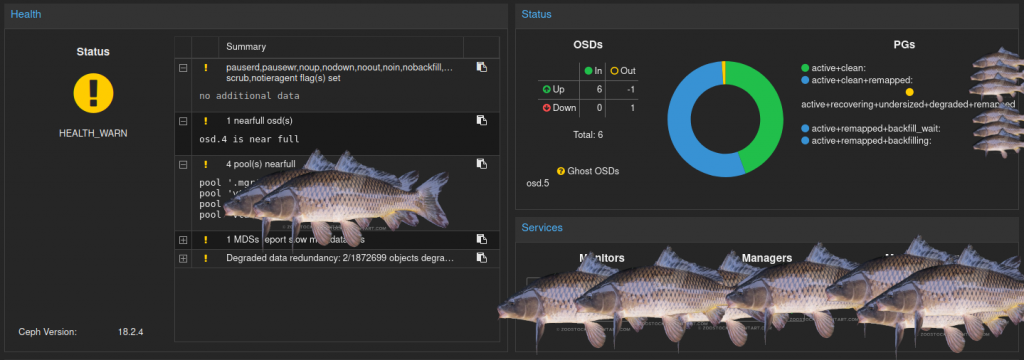

20241115, Day 90

Freed up more spindles by emptying a zfs

They’d better kiss their bass goodbye

Yay, out of sync again

Eleven spindles

20241116, Day 91

Today is Caturday

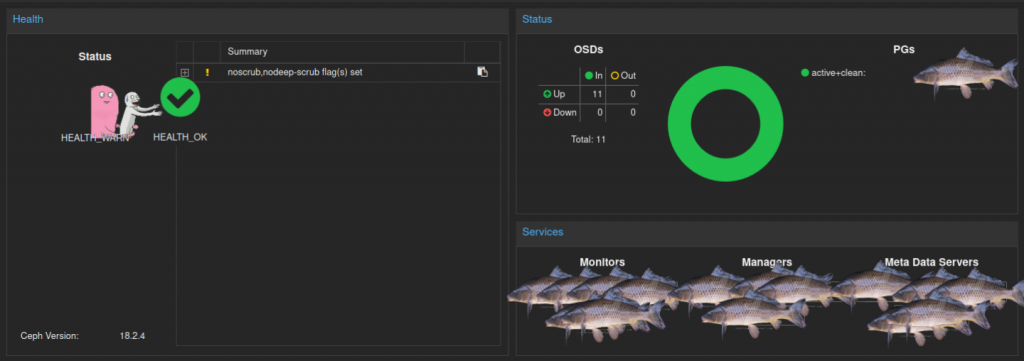

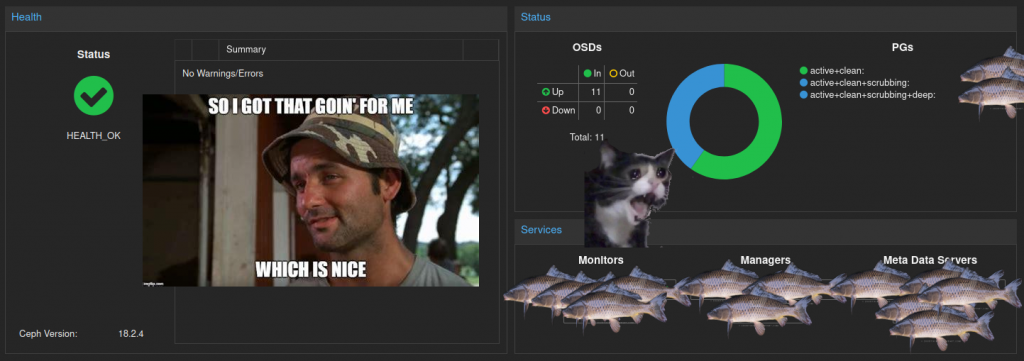

Pool finally balanced out, so all in the clear right?

No, have to re-enable scrubs. Clears error board which is a nice thing to have going for you but now it has to work more

Instead of piping through NFS, thought i’d be smart and have Proxmox do it. It did grab the zpool, but i then realized there’s no real way for the VM to easily see that as files. So i just gave the HBAs to a VM that has Ceph mounted, and it finally got the zpool started on a copy roflolol

20241117, Day 92

Added first SSD OSD

Moved a stripe onto Ceph and incorporated that drive

Great: Biggest and smallest OSD on the same host. Not recommended…

Rest of the day messing with rsync for more migrations and backups. And, we’re back in the blue…

20241118, Day 93

Messing around with dedupe tools since I’ve loaded up the pool with a bunch of redundant stuff. What’s there to think about?

GUI options are generally snaps these days and had a problem I didn’t bother figuring out regarding accessing mountpoints.

Multiple CLI options, and can run on a cool workstation?

yes: because it’s Ceph there’s not some NAS central bottleneck, requests are served over all OSD’s

no: running on a workstation would not take advantage of the fast private network.

So. Check the dupes on the hypervisor then, also get advantage of ram and processors. That’s dirty but part of homesteading.
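
For a feel of what these tools do under the hood, here is a minimal Python sketch of the usual approach: group files by size first, since that is cheap, then hash only the files that share a size. It is not any particular tool's implementation, and `find_duplicates` is a made-up name.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in chunks so big files don't eat RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(root):
    """Return a list of groups (sorted lists of Paths) with identical content."""
    by_size = defaultdict(list)
    for path in Path(root).rglob("*"):
        if path.is_file() and not path.is_symlink():
            by_size[path.stat().st_size].append(path)

    by_hash = defaultdict(list)
    for same_size in by_size.values():
        if len(same_size) < 2:
            continue  # a unique size cannot have a duplicate
        for path in same_size:
            by_hash[file_digest(path)].append(path)

    return [sorted(group) for group in by_hash.values() if len(group) > 1]


if __name__ == "__main__":
    import sys
    for group in find_duplicates(sys.argv[1] if len(sys.argv) > 1 else "."):
        print(*group, sep="\n  ")
```

Hashing reads every candidate file end to end, which is why running it next to the storage beats pulling everything across to a workstation.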

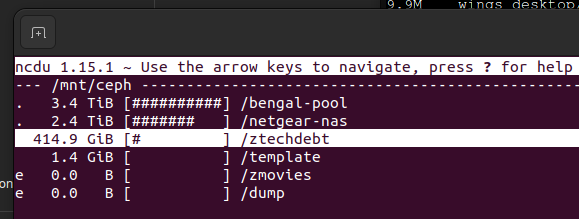

20241119, Day 94

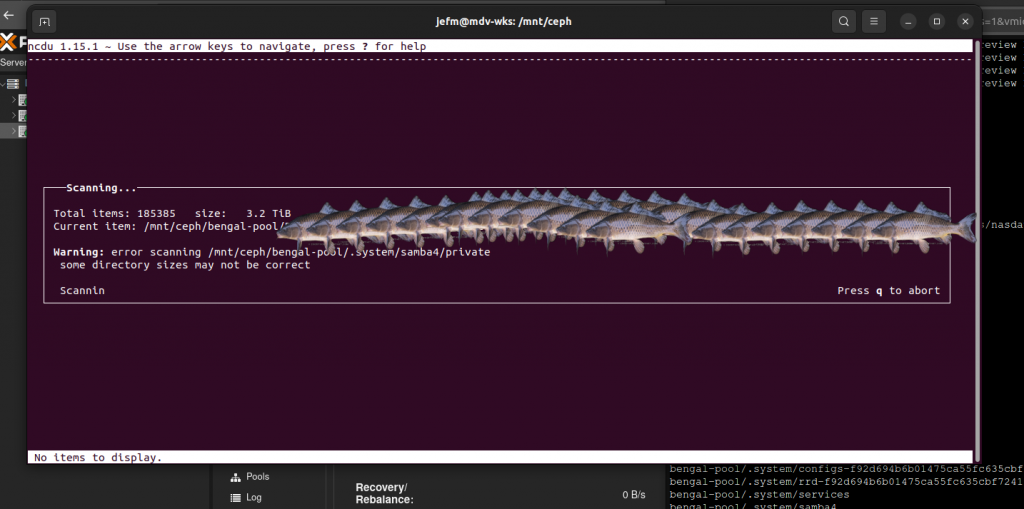

Pumped the brakes on copying things into Ceph. Need to take a different tack since the storage is getting too bogged down and filled up. I didn’t buy a single hard drive for this adventure so the OSD layout is what I had on hand and I need to pause and appreciate it hasn’t had some big problem. (yet)

Never be afraid to pause and have a think.

Playing around more with rsync, dedupe tools and a new friend ncdu which is lightning in CLI and installs where GUIs fear to tread.

20241120, Day 95

Shut down a host to swing in another old NAS…

Didn’t take long til i found out

What people mean by down & out

Caught in a bad romance…

(bit of a one-sided relationship imo)

Mounted zpool and copied off.

20241121, Day 96

Got the /mdv zpool copied off and rsync confirmed. Still did an fdupe, and just let it delete the zpool copy. Confirm and delete, two steps at once.
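
The "confirm before delete" idea, sketched in Python as a stand-in for what the tools did here: walk the original tree and list anything that is missing from the copy or differs byte for byte. The function name `unverified_files` is invented, and this is not how rsync or the dedupe tool do it internally.

```python
import filecmp
from pathlib import Path


def unverified_files(original, copy):
    """Return relative paths under `original` that are missing or different in `copy`."""
    original, copy = Path(original), Path(copy)
    problems = []
    for src in sorted(original.rglob("*")):
        if not src.is_file():
            continue
        relative = src.relative_to(original)
        dst = copy / relative
        # shallow=False forces a real content comparison, not just size/mtime.
        if not dst.is_file() or not filecmp.cmp(src, dst, shallow=False):
            problems.append(relative)
    return problems


if __name__ == "__main__":
    import sys
    leftovers = unverified_files(sys.argv[1], sys.argv[2])
    if leftovers:
        print("NOT safe to delete the original yet:")
        for path in leftovers:
            print("  ", path)
    else:
        print("Every file in the original has an identical copy.")
```

Only an empty list means the original is safe to wipe.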

Bit of a phone-in today but had doctor stuff to deal with.

20241122, Day 97

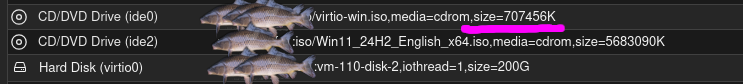

What better way to spend a sick day than installing this sick OS???

Windows is great

It needs 707MB of helper drivers just to connect to a hard drive

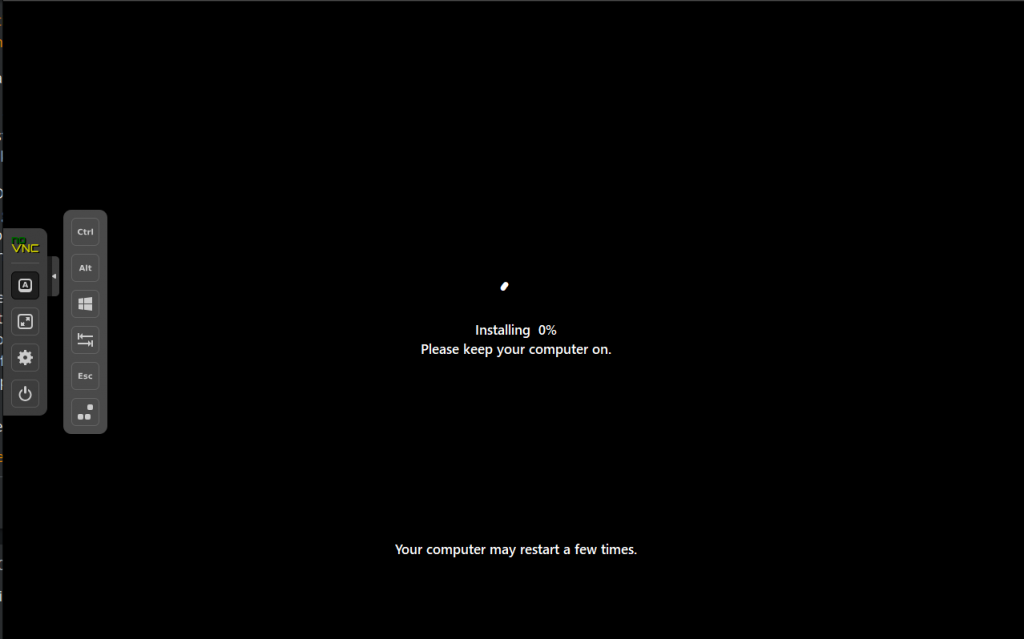

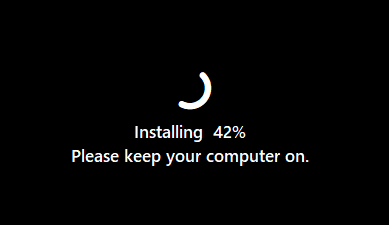

Oh my

Taking a while

Did i get a lot older just then?

This is rough. Say what you want about my homelab, but if this were an Ubuntu VM, we’d be installed, on LibreOffice, running GIMP and cutting memes by now. Might have to see how it’s worked out tomorrow.

20241123, Day 98

Today is Caturday

Finished the W11 install

After a while

Ceph pool almost converged….

And then it was

Which is a good thing, because…. >_>

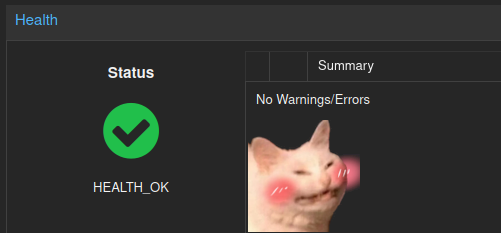

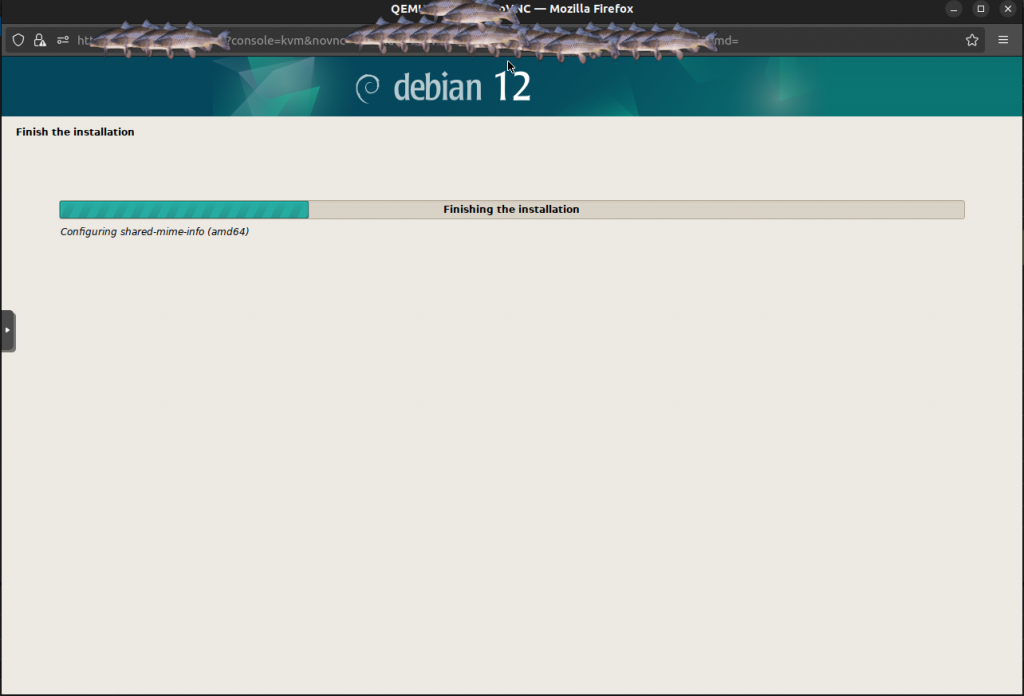

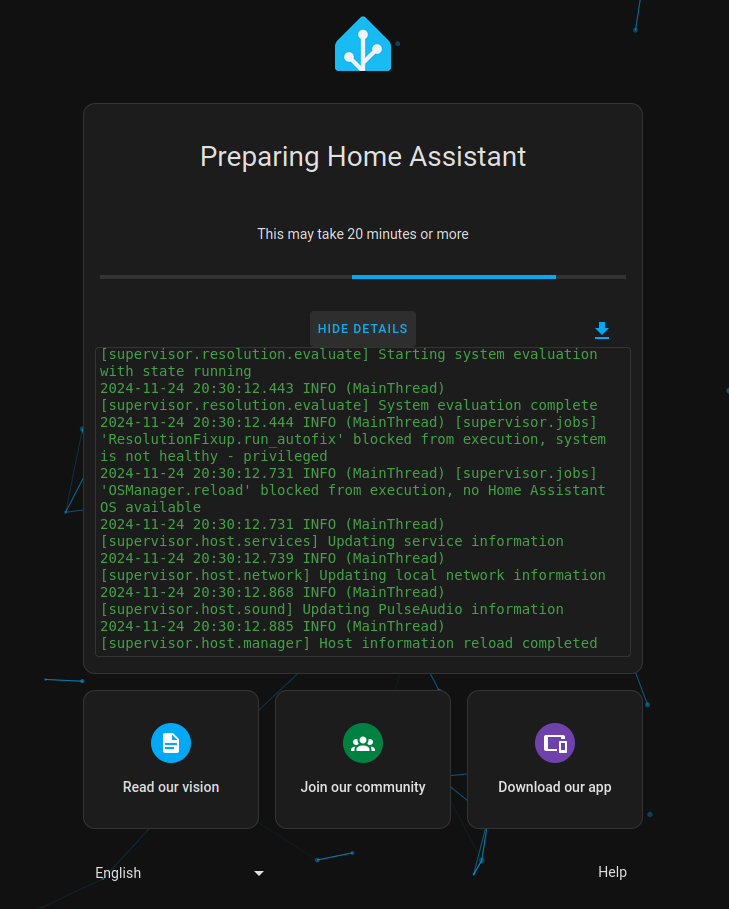

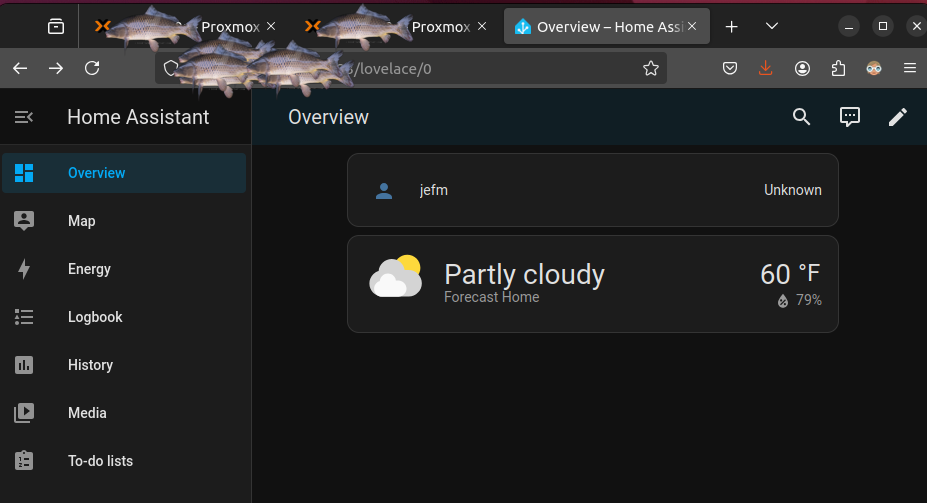

20241124, Day 99

Ceph reached full convergence *^_^* No global flags or anything either

Celebrate by installing Debian 12 real quick…

Including Docker and all the snag….

Welcome home

all it does is show the weather for now XD

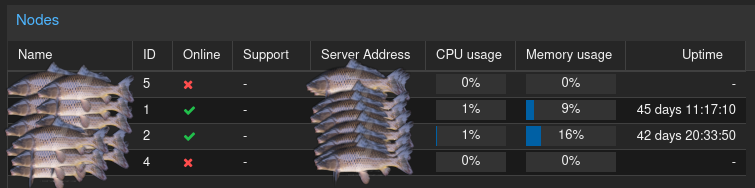

20241125, Day 100

Phased out and shut down my prior Proxmox cluster. It was down to two machines…

… just whirring away, for over forty days

Testing out more dedup tools. Getting good at setting up tests and discovering what will or won’t do what i need.

100 days! I did the thing.